Overview

Consider a common scenario: a team needs to run batch jobs and is moving away from conventional systems to the cloud.

AWS Batch is built for this case. It lets you run hundreds of thousands of batch computing jobs easily and efficiently.

AWS Batch dynamically provisions the optimal quantity and type of compute resources (e.g., CPU or memory optimized instances) based on the volume and specific resource requirements of the batch jobs submitted.

With AWS Batch, there is no need to install and manage batch computing software or the server clusters that run your jobs. This lets you focus on analyzing results and solving problems rather than managing infrastructure.

AWS Batch plans, schedules, and executes your batch computing workloads across the full range of AWS compute services and features, such as AWS Fargate, Amazon EC2, and Spot Instances.

Benefits of AWS Batch

The key benefits of AWS Batch are:

There is no batch software to install, upgrade, or maintain, and no related configuration to manage.

It works with many types of software, including containers, microservices, custom software, and hosted servers or services.

It scales dynamically with the workload.

It is efficient, easy to use, and designed for the cloud.

Because it scales automatically, AWS Batch can run parallel jobs that need a large amount of compute power, using the elasticity and choice of instance types provided by Amazon EC2 together with other services such as Amazon S3, Amazon SNS, and Amazon DynamoDB.

We offer cloud infrastructure and its management as a service. Do feel free to ask us for more information at info@bridgeapps.co.uk

AWS Batch Concepts

Job

A job is a unit of work submitted to AWS Batch. It can be any executable, for example a shell script, a microservice, or a container image. Every job runs as a containerized application on AWS Fargate or Amazon EC2, using the parameters specified in a job definition.

A job can depend on the successful completion of other jobs, and can reference other jobs to continue the processing.
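As a rough illustration of job dependencies, the sketch below builds request payloads for two jobs where the second waits on the first. The field names (`jobName`, `jobQueue`, `jobDefinition`, `dependsOn`) follow the AWS Batch SubmitJob request; the helper `build_submit_request`, the job names, the queue name, and the job ID are hypothetical placeholders, and nothing is sent to AWS.

```python
def build_submit_request(job_name, queue, definition, depends_on_ids=()):
    """Build a SubmitJob-style request dict; no AWS call is made here."""
    request = {
        "jobName": job_name,
        "jobQueue": queue,
        "jobDefinition": definition,
    }
    if depends_on_ids:
        # Each entry names a job that must finish successfully first.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on_ids]
    return request


# Hypothetical names and IDs, for illustration only.
extract = build_submit_request("extract-data", "example-queue", "example-job-def")
report = build_submit_request(
    "build-report",
    "example-queue",
    "example-job-def",
    depends_on_ids=["job-id-returned-for-extract"],
)
print(report["dependsOn"])
```

In practice the ID placed in `dependsOn` would be the `jobId` returned when the first job is submitted.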

Every job definition includes a set of details, for example:

Details of the executable to be run

The AWS Identity and Access Management (IAM) role that gives the job access to AWS resources

Memory and CPU requirements

Container properties, mount points, environment variables, and any other properties or scripts to be executed.
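The details listed above map onto the fields of a container job definition. The following is a minimal sketch of such a definition as a Python dictionary, shaped like the AWS Batch RegisterJobDefinition request. Every name, ARN, and value in it is a hypothetical placeholder, and the dictionary is only printed, not sent to AWS.

```python
import json

# All names, ARNs, and values below are hypothetical placeholders.
job_definition = {
    "jobDefinitionName": "example-job-def",
    "type": "container",
    "containerProperties": {
        # Details of the executable to run
        "image": "public.ecr.aws/amazonlinux/amazonlinux:latest",
        "command": ["echo", "hello from AWS Batch"],
        # IAM role that gives the job access to AWS resources
        "jobRoleArn": "arn:aws:iam::123456789012:role/example-batch-job-role",
        # CPU and memory requirements (memory is in MiB)
        "resourceRequirements": [
            {"type": "VCPU", "value": "1"},
            {"type": "MEMORY", "value": "2048"},
        ],
        # Environment variables passed to the container
        "environment": [{"name": "STAGE", "value": "dev"}],
        # A host volume and the mount point that exposes it in the container
        "volumes": [{"name": "scratch", "host": {"sourcePath": "/tmp"}}],
        "mountPoints": [
            {"sourceVolume": "scratch", "containerPath": "/scratch", "readOnly": False}
        ],
    },
}

print(json.dumps(job_definition, indent=2))
```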

Queue

A job queue holds submitted AWS Batch jobs until they are scheduled to run on a compute environment, such as a set of EC2 instances.

Each queue has an associated priority value. Jobs from a higher-priority queue are run before jobs from a lower-priority queue.
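The effect of queue priority can be pictured with a small simulation. This is a simplified model rather than the actual AWS Batch scheduler: it assumes a larger priority number means the queue is served first and that jobs within a queue run in FIFO order. The queue and job names are hypothetical.

```python
from collections import deque


def next_job(queues):
    """Return the next job, preferring the queue with the highest priority value."""
    for queue in sorted(queues, key=lambda q: q["priority"], reverse=True):
        if queue["jobs"]:
            # Within a queue, jobs leave in the order they arrived (FIFO).
            return queue["jobs"].popleft()
    return None


# Hypothetical queues and jobs, for illustration only.
queues = [
    {"name": "low", "priority": 1, "jobs": deque(["nightly-report"])},
    {"name": "high", "priority": 10, "jobs": deque(["urgent-fix", "urgent-check"])},
]

order = []
while (job := next_job(queues)) is not None:
    order.append(job)

print(order)  # ['urgent-fix', 'urgent-check', 'nightly-report']
```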

Scheduler

The scheduler is attached to a job queue and determines when, and in what order, the jobs submitted to that queue are run. By default, the scheduler is FIFO-based.

The scheduler also matches each job to an appropriately sized EC2 instance, and AWS Batch launches and terminates EC2 instances as needed.
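To give a feel for what "the right EC2 size" could mean, here is a simplified best-fit sketch: choose the smallest instance type that satisfies a job's vCPU and memory request. This is an assumption-laden illustration, not how AWS Batch is implemented; the real service also weighs factors such as allocation strategy and availability. The catalogue is a hypothetical, trimmed-down list and `pick_instance` is an illustrative helper.

```python
# Hypothetical, trimmed-down catalogue: (name, vCPUs, memory in GiB).
INSTANCE_TYPES = [
    ("c5.large", 2, 4),
    ("m5.large", 2, 8),
    ("c5.xlarge", 4, 8),
    ("m5.xlarge", 4, 16),
]


def pick_instance(vcpus, memory_gib):
    """Return the smallest instance type that fits the request, or None."""
    candidates = [
        t for t in INSTANCE_TYPES if t[1] >= vcpus and t[2] >= memory_gib
    ]
    if not candidates:
        return None
    # "Smallest" here means fewest vCPUs, then least memory.
    return min(candidates, key=lambda t: (t[1], t[2]))[0]


print(pick_instance(2, 6))  # m5.large
print(pick_instance(3, 4))  # c5.xlarge
print(pick_instance(8, 8))  # None
```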

AWS Batch Flow

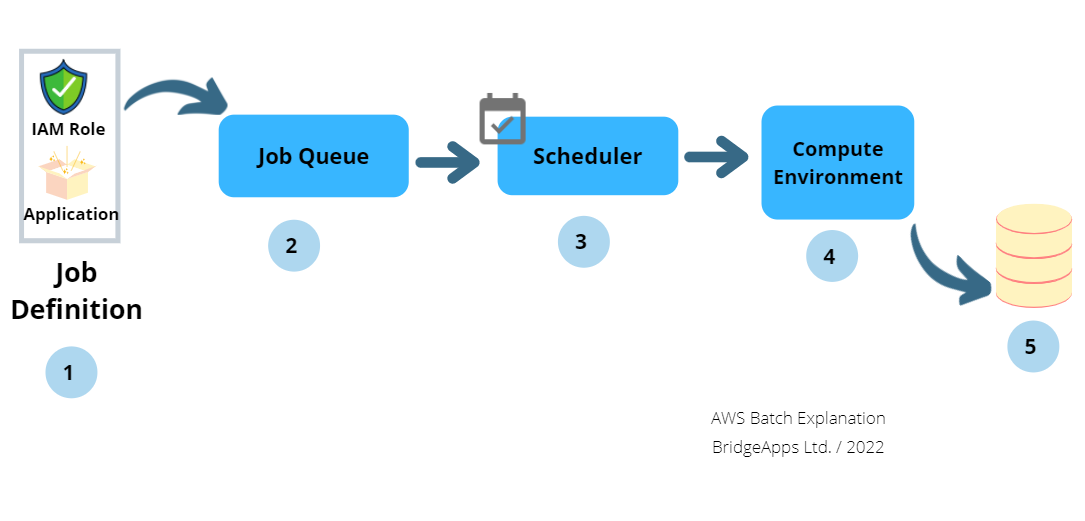

The diagram above shows the flow of events when configuring a batch job on AWS Batch.

The user creates a job definition that includes:

Application image and configuration

IAM role

The user submits the job to a Batch job queue.

When the job is due to run, the Batch scheduler evaluates the CPU, memory, and GPU needed for execution and launches the compute resources and environment.

The Batch scheduler places the job (the application instance) on this compute environment. If multiple jobs are submitted at the same time, queue priority and FIFO ordering determine which runs first.

Once finished, the job exits with a status and writes its results to the user-defined storage.
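The flow above can be sketched in Python as a single function. It assumes a Batch client object (for example one created with `boto3.client("batch")`) is passed in, that the job queue already exists, and that the queue is backed by an EC2 compute environment. The function name `run_batch_job`, its arguments, and the resource values are hypothetical choices for this illustration.

```python
import time


def run_batch_job(batch, job_name, queue, image, role_arn, poll_seconds=10):
    """Register a job definition, submit a job, and wait for its final status."""
    # Step 1: job definition with the application image, IAM role, and resources.
    definition = batch.register_job_definition(
        jobDefinitionName=f"{job_name}-def",
        type="container",
        containerProperties={
            "image": image,
            "jobRoleArn": role_arn,
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},  # MiB
            ],
        },
    )

    # Step 2: submit the job to the queue; the scheduler takes over from here.
    job = batch.submit_job(
        jobName=job_name,
        jobQueue=queue,
        jobDefinition=definition["jobDefinitionArn"],
    )

    # Final step: poll until the job exits with a terminal status.
    while True:
        status = batch.describe_jobs(jobs=[job["jobId"]])["jobs"][0]["status"]
        if status in ("SUCCEEDED", "FAILED"):
            return status
        time.sleep(poll_seconds)
```

With boto3 installed and AWS credentials configured, a call might look like `run_batch_job(boto3.client("batch"), "demo-job", "example-queue", image_uri, role_arn)`, where the queue name, image URI, and role ARN are your own values. Writing results to storage such as Amazon S3 is left to the job's own code.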

Use of ECR / ECS with AWS Batch

AWS Batch works with Amazon ECR (Elastic Container Registry) and Amazon ECS (Elastic Container Service): job container images are stored in ECR and run on ECS.

In other words, containers are pulled natively from ECR and launched on ECS with the appropriate compute resources, without any extra steps.

If another container registry such as Docker Hub is used, additional pull and authentication/authorization steps need to be configured.
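A job definition refers to its container by image URI, so it can be handy to tell ECR images apart from images hosted elsewhere. The sketch below builds a private ECR image URI and checks whether a given URI looks like one. It assumes the standard `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` form; the helper names, account ID, and repository name are hypothetical.

```python
import re

# Matches the standard private ECR image URI form (an assumption of this sketch).
ECR_PATTERN = re.compile(
    r"^(?P<account>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<repo>[^:@]+)(?::(?P<tag>.+))?$"
)


def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Build an image URI for a repository in a private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def is_private_ecr_image(image):
    """Return True if the image URI points at a private ECR registry."""
    return ECR_PATTERN.match(image) is not None


# Hypothetical account ID and repository name.
uri = ecr_image_uri("123456789012", "eu-west-2", "example-repo", "1.0")
print(uri)
print(is_private_ecr_image(uri))                              # True
print(is_private_ecr_image("docker.io/library/python:3.12"))  # False
```

An image that fails this check is the case where the extra pull and authentication configuration mentioned above would need attention.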

Summary

AWS Batch is a very convenient way to set up and launch batch jobs with minimal effort and maximum efficiency.

Tags

#cloud #aws #awsbatch #batch #integration #java #platform #platformservices #infrastructureascode #management #digital #automation

Author: Mubin Shaikh / BridgeApps Ltd

We at BridgeApps UK offer Infrastructure Management to our Customers, covering almost all aspects of DevOps & Cloud/ OnPremise Infrastructure. Do feel free to enquire more at - info@bridgeapps.co.uk
